Table of Contents

- What is Affective Computing?

- What is Emotion Artificial Intelligence?

- What Are the Applications of Emotion Artificial Intelligence?

- How to Use Emotion Artificial Intelligence in Education

- How the Outbreak of COVID-19 Helped Achieve Progress in Emotion AI

- What Are the Ethical Concerns of Emotional Artificial Intelligence?

- Some Final Thoughts On Emotional AI

Although researchers are actively working on it, emotion recognition is not yet as mainstream as some other applications of artificial intelligence. But this may soon change. Though still treated as a niche application, the ability to accurately recognize human emotions has major implications for every aspect of human-AI interaction and, as you'll see, can even help people do their jobs better.

The idea of machines understanding your emotions may seem foreign, since humans themselves often struggle to understand how others feel. The growing emotional distance between people has become more apparent recently. Over the years, numerous technological advances have made us less dependent on our social skills, which has masked a decline in the quality of human interaction that has been under way for quite some time.

The outbreak of COVID-19 shone a light on problems that had been hiding in plain sight for years. The education industry in particular has had its shortcomings exposed: the gap between good educators and less effective ones has never been more evident. With most lessons held over Zoom, Skype, or similar platforms, it has never been harder to tell whether students are focused, whether they are interested in the material, and whether they understand what is being explained. So how exactly does emotion recognition factor into all of this?

What is Affective Computing?

The whole idea of analyzing human emotions originates from the field of affective computing. There are many definitions of affective computing, but a commonly cited one comes from "Affective Computing: A Review", authored by Jianhua Tao and Tieniu Tan in 2005:

Affective computing is the study and development of systems and devices that can recognize, interpret, process, and simulate human affects. It is an interdisciplinary field spanning computer science, psychology, and cognitive science.

As technology has progressed, it has become evident that understanding the emotive channels of human interaction is as important as understanding the cognitive ones. As a subset of affective computing, emotion recognition aims to do just that. Detecting, filtering, and interpreting verbal and non-verbal communication is essential to understanding how someone feels.

Emotions are, by their very nature, intangible, but the way we express them isn't. From a young age, we are all conditioned to look for and analyze verbal and non-verbal communication; we just do it subconsciously. Many clues show us how somebody feels: the look on their face, the gestures they use, their voice, and so on. So how does Emotion AI work?

What is Emotion Artificial Intelligence?

To understand the role that Emotion AI can play in the education industry, you must first understand, at least on a basic level, what Emotion AI covers. Emotion AI can be separated into two components:

- Emotion Recognition - building a repository of emotion responses

- Emotional Response - applying knowledge gained through emotion recognition

Although we provide many clues that betray our emotions, for the education industry the easiest things to focus on are facial expressions, voice, and gestures.

1. Face Analysis

There is an almost limitless range of facial expressions, but for simplicity, emotions are commonly grouped into six categories often described as universally recognized (the basic emotions proposed by psychologist Paul Ekman):

- Anger

- Happiness

- Surprise

- Disgust

- Sadness

- Fear

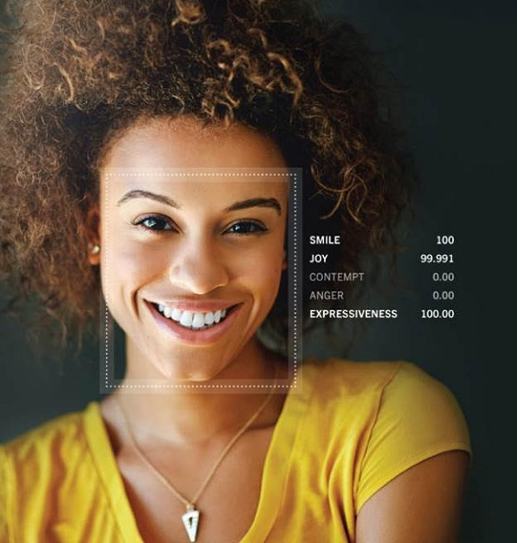

Machine learning models used for emotional analysis have started including other parameters such as whether or not a person is smiling, how expressive a face is, etc. They deduce how a given person feels not necessarily by placing them into one of these large emotional categories (angry, happy, surprised), but usually by assigning them a combination of these emotions.

Image credit: AI Trends

This gives a reasonably good estimate of how someone feels at a given moment. However, facial analysis is still limited and is not enough on its own to properly understand how someone feels. Voice and gesture analysis can help fill the gaps.
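To make the idea of "a combination of emotions" concrete, here is a minimal sketch that assumes a face-analysis model outputs one raw, unnormalized score per basic emotion. It normalizes those scores with a softmax into a blend that sums to 1 and then keeps every emotion above a threshold instead of forcing a single label. The function names, the input scores, and the 0.2 threshold are hypothetical; softmax is a common normalization choice, not necessarily what any particular product uses.

```python
import math

# The six basic emotion categories listed above.
EMOTIONS = ["anger", "happiness", "surprise", "disgust", "sadness", "fear"]


def emotion_blend(logits):
    """Turn one raw score per emotion into a probability-like blend.

    `logits` is a hypothetical list of six unnormalized scores, in the
    same order as EMOTIONS, such as a face-analysis model might output.
    """
    if len(logits) != len(EMOTIONS):
        raise ValueError("expected one score per emotion")
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return {name: e / total for name, e in zip(EMOTIONS, exps)}


def dominant_emotions(blend, threshold=0.2):
    """Return every emotion whose share meets the (hypothetical) threshold,
    strongest first, rather than a single winning label."""
    ranked = sorted(blend.items(), key=lambda kv: kv[1], reverse=True)
    return [name for name, share in ranked if share >= threshold]
```

For example, `dominant_emotions(emotion_blend([0.1, 2.0, 1.8, -1.0, 0.0, -0.5]))` returns `["happiness", "surprise"]`: the face is read as a mix of happiness and surprise rather than as one or the other.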

Article continues below

Want to learn more? Check out some of our courses:

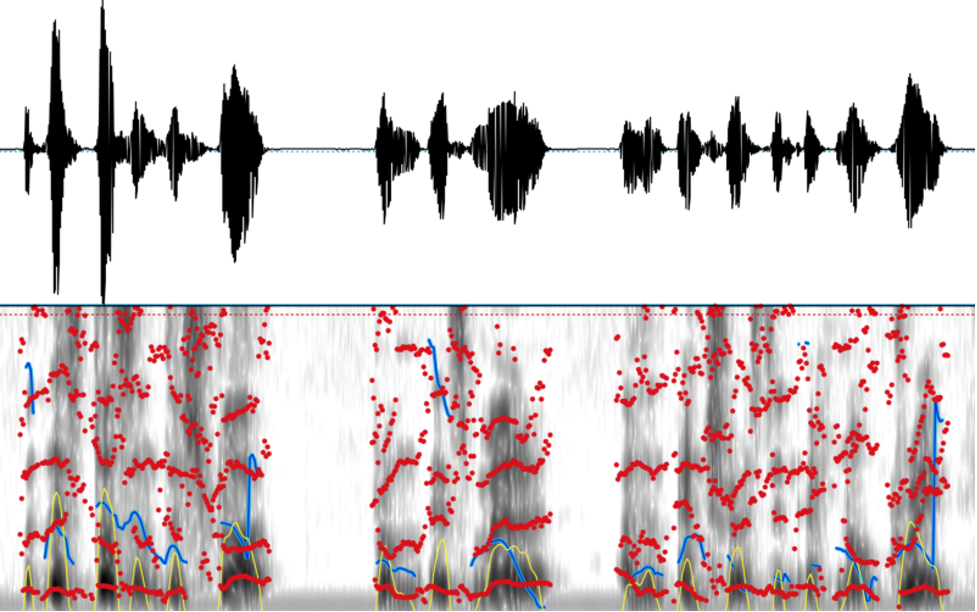

2. Voice Analysis

A typical approach to voice analysis uses several metrics, each treated as a separate "emotional measure." For example:

- Valence - how positive or negative the expressed emotion is

- Activation - how energized or aroused the speaker is (alert vs. passive)

- Dominance - how much the speaker appears to be in control of the situation
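Models that estimate these measures usually start from low-level acoustic features computed over short frames of audio. The sketch below computes two simple, widely used ones: root-mean-square (RMS) energy, a loudness-like measure that is often reported to relate to activation, and zero-crossing rate. It is only an illustration of the feature-extraction step under these assumptions; the mapping from features to valence, activation, and dominance would be learned by a trained model and is not shown. The frame sizes assume 16 kHz audio.

```python
import math


def frame_signal(samples, frame_len, hop):
    """Split a list of audio samples into overlapping frames."""
    return [samples[i:i + frame_len]
            for i in range(0, len(samples) - frame_len + 1, hop)]


def rms_energy(frame):
    """Root-mean-square energy of one frame: a loudness-like measure."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose sign changes."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


def frame_features(samples, frame_len=400, hop=160):
    """Per-frame (energy, zcr) pairs. At an assumed 16 kHz sample rate,
    400 and 160 samples correspond to 25 ms frames with a 10 ms hop."""
    return [(rms_energy(f), zero_crossing_rate(f))
            for f in frame_signal(samples, frame_len, hop)]
```

A sequence of such per-frame features (typically many more than two) is what a speech emotion model would consume to produce one score per emotional measure.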

On standard benchmark datasets such as IEMOCAP, machine learning models have been reported to outperform humans at speech emotion recognition. For example, the DANN model proposed in "Domain Adversarial Learning for Emotion Recognition" (Zheng Lian, Jianhua Tao, Bin Liu, and Jian Huang, 2020) achieves an accuracy of over 80%.
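Accuracy figures on IEMOCAP are commonly reported in two forms: weighted accuracy (the share of all utterances classified correctly) and unweighted accuracy (the mean of per-class recalls). Because emotion classes in such datasets are imbalanced, the two can differ noticeably, so it is worth checking which metric a reported figure uses. The minimal sketch below computes both; the labels in the example are hypothetical.

```python
from collections import Counter


def weighted_accuracy(y_true, y_pred):
    """Overall accuracy: correct predictions divided by all predictions."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def unweighted_accuracy(y_true, y_pred):
    """Mean per-class recall, so rare classes count as much as common ones."""
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return sum(hits[c] / totals[c] for c in totals) / len(totals)


# Hypothetical, imbalanced example: a model that over-predicts "neutral".
y_true = ["neutral"] * 6 + ["angry"] * 2 + ["sad"] * 2
y_pred = ["neutral"] * 6 + ["angry", "neutral"] + ["neutral", "neutral"]
```

Here `weighted_accuracy(y_true, y_pred)` is 0.7, while `unweighted_accuracy(y_true, y_pred)` is only 0.5, because the model never recognizes "sad" at all.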

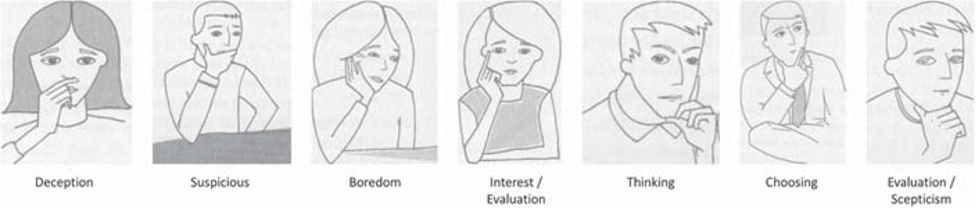

3. Gesture Analysis

Finally, there are gestures. Gestures seem to play a big part in how effectively we communicate our emotions and how well we understand the emotions of others. Because of social distancing, most meetings and lectures take place online, which limits our ability to use gestures to communicate. The many research articles written about the importance of gestures show just how limiting that is.

For example, in Associating Facial Expressions and Upper-Body Gestures with Learning Tasks for Enhancing Intelligent Tutoring Systems (Ardhendu Behera, Peter Matthew, Alexander Keidel, Peter Vangorp, Hui Fang, and Susan Canning, 2020), the authors suggest that recognizing emotions from both the face and the body is 35% more accurate than using the face alone. They also propose a deep learning model that analyzes hand-over-face (HoF) gestures along with other non-verbal behavior.

Image source: Ardhendu Behera et al., Associating Facial Expressions and Upper-Body Gestures with Learning Tasks for Enhancing Intelligent Tutoring Systems
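One simple way to see why adding body cues can help is late fusion: two separate classifiers (one for the face, one for the upper body) each output class probabilities, and the final prediction uses a weighted average of the two. The sketch below is a generic illustration of late fusion under that assumption, not the deep learning model proposed by Behera et al.; the state labels, probabilities, and equal weighting are hypothetical.

```python
def late_fusion(face_probs, body_probs, face_weight=0.5):
    """Weighted average of two per-class probability dictionaries.

    A class missing from one dictionary is treated as probability 0 there.
    """
    if not 0.0 <= face_weight <= 1.0:
        raise ValueError("face_weight must be between 0 and 1")
    labels = face_probs.keys() | body_probs.keys()
    return {c: face_weight * face_probs.get(c, 0.0)
               + (1.0 - face_weight) * body_probs.get(c, 0.0)
            for c in labels}


def predict(probs):
    """Label with the highest probability."""
    return max(probs, key=probs.get)


# Hypothetical outputs for one video frame of a student.
face = {"engaged": 0.40, "confused": 0.35, "bored": 0.25}
body = {"engaged": 0.10, "confused": 0.70, "bored": 0.20}  # e.g. hand over face
```

The face alone suggests "engaged" (`predict(face)`), but the fused estimate `predict(late_fusion(face, body))` is "confused", because the body cue tips an otherwise ambiguous face. Learned models like the one in the paper combine the two sources inside the network instead of averaging fixed outputs.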

What Are the Applications of Emotion Artificial Intelligence?

As with most technological advances, Emotion AI has moved beyond its original purpose of giving machines emotional intelligence and is now used in many different industries:

- Marketing

- Insurance

- Customer Service

- Human Resources

- Healthcare

- Education

- Driving Assistance

The advertising and automotive industries have been at the forefront of using Emotion AI for quite some time. A typical example is Affectiva, a company based in Boston, MA, that develops Emotion AI for the automotive and advertising industries.

Affectiva's work illustrates how powerful AI-based emotion recognition can be: by capturing facial information from viewers, they were able to understand how people reacted to advertising videos, in effect helping brands make sure their message reached their target audience as intended. One study reported a double-digit increase in sales when Emotion AI was used to elicit positive emotional responses from viewers.

In the automotive industry, technology that analyzes your face to predict how you feel, and even how likely you are to fall asleep behind the wheel, could help reduce the number of road accidents.
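As an illustration of how drowsiness detection can work, the sketch below uses the eye aspect ratio (EAR), a well-known measure computed from six eye landmarks: EAR = (|p2 - p6| + |p3 - p5|) / (2 |p1 - p4|). The ratio stays roughly constant while the eye is open and drops toward zero as it closes, so EAR staying low for many consecutive frames suggests the eyes are closing for longer than a normal blink. This assumes an upstream face-landmark detector (not shown) supplies the points; the 0.2 threshold and 48-frame window are hypothetical defaults, and this is not presented as the method any particular vendor uses.

```python
import math


def eye_aspect_ratio(eye):
    """Eye aspect ratio from six (x, y) eye landmarks p1..p6.

    p1 and p4 are the eye corners; p2 and p3 lie on the upper lid,
    p6 and p5 on the lower lid. The value falls toward 0 as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


class DrowsinessMonitor:
    """Flags drowsiness when the average EAR of both eyes stays below a
    threshold for many consecutive frames (48 frames is about 1.6 s at
    30 fps). Both defaults are hypothetical and would need tuning."""

    def __init__(self, threshold=0.2, min_frames=48):
        self.threshold = threshold
        self.min_frames = min_frames
        self.closed_frames = 0

    def update(self, left_eye, right_eye):
        """Process one video frame; return True while drowsiness is flagged."""
        ear = (eye_aspect_ratio(left_eye) + eye_aspect_ratio(right_eye)) / 2.0
        if ear < self.threshold:
            self.closed_frames += 1
        else:
            self.closed_frames = 0  # eyes reopened: reset the counter
        return self.closed_frames >= self.min_frames
```

Requiring many consecutive low-EAR frames is the key design choice: it lets ordinary blinks pass while still catching eyes that stay closed.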

How to Use Emotion Artificial Intelligence in Education

In education, Emotion AI was initially used to support the education of children with special needs. Today, however, there is interest in incorporating Emotion AI into many more educational contexts. For example, by using Emotion AI to analyze students' responses during a lecture, educators could determine how well students understand the material being taught.

Furthermore, knowing when students seem to lose focus can help teachers structure their lectures differently, perhaps by switching to more engaging teaching formats. This allows for a more personalized approach to teaching and could even allow teaching platforms to match teachers and students based on learning styles. Looking for signs of frustration in a student's voice can also be very helpful, giving teachers insight into how students feel.
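As a rough illustration of spotting when attention drops, the sketch below assumes an Emotion AI system already produces one engagement score between 0 and 1 per minute of a lecture (how those scores are produced is outside the sketch). It smooths the scores with a trailing moving average and reports the stretches where the smoothed value stays below a threshold. The function names, window size, threshold, and scores are all hypothetical.

```python
def rolling_mean(values, window):
    """Trailing moving average; the first window-1 points average fewer samples."""
    out = []
    total = 0.0
    for i, v in enumerate(values):
        total += v
        if i >= window:
            total -= values[i - window]  # drop the value that left the window
        out.append(total / min(i + 1, window))
    return out


def low_focus_periods(scores, window=3, threshold=0.4):
    """Return inclusive (start, end) index ranges where the smoothed
    engagement score stays below the threshold."""
    smoothed = rolling_mean(scores, window)
    periods = []
    start = None
    for i, s in enumerate(smoothed):
        if s < threshold and start is None:
            start = i
        elif s >= threshold and start is not None:
            periods.append((start, i - 1))
            start = None
    if start is not None:  # a low period that lasts until the end
        periods.append((start, len(smoothed) - 1))
    return periods


# Hypothetical per-minute engagement scores for a 10-minute segment.
scores = [0.8, 0.7, 0.75, 0.3, 0.2, 0.25, 0.3, 0.8, 0.9, 0.85]
```

With these scores, `low_focus_periods(scores)` returns `[(5, 6)]`. The raw dip starts at minute 3, but smoothing delays the flag: the trade-off is that a single distracted moment does not trigger an alert, at the cost of reacting a little later.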

Establishing a good connection between the teacher and the students has always been one of the most important parts of the educational process, and the outbreak of COVID-19 has made it more difficult. Some information, such as how focused students are, whether they find lectures engaging, and whether they need a break, is often lost when communicating online.

Maintaining a connection between teachers and students is now more important than ever, and also harder than ever. Emotion AI, using technologies such as eye-tracking, voice analysis, and facial coding, could help teachers optimize each student's learning experience. Emotion AI technology cannot replace teachers, but it can help them, and in situations like these every little bit of help counts.

This last point bears repeating: although Emotion AI technology can help teachers, it can never take their place. To improve the quality of education, researchers and developers should focus on augmenting the work of teachers instead of trying to replace them altogether.

How the Outbreak of COVID-19 Helped Achieve Progress in Emotion AI

With a little cooperation between researchers and students, the current situation could be used to collect an enormous amount of data. One of the biggest difficulties in training machine learning models is collecting quality data. Without enough quality data, it is impossible to get good results. "Garbage in, garbage out" is a very common saying in the deep learning community.

This is especially important when trying to create AI that is able to distinguish emotions. Due to the broad range of facial expressions, vocal intonations, and special vocal cues (such as word lengthening) that a machine learning model needs to cover, you will need incredibly large and diverse datasets.
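Before training, one practical way to check whether a dataset is diverse enough is to count examples for every combination of emotion label and group, such as a cultural or regional attribute, and flag combinations that fall below a minimum. The sketch below assumes each sample has already been reduced to an `(emotion, group)` pair; the function name, group labels, and minimum count are hypothetical.

```python
from collections import Counter
from itertools import product


def coverage_gaps(samples, min_count, emotions=None, groups=None):
    """List (emotion, group, count) combinations with fewer than min_count samples.

    `samples` is a list of (emotion_label, group) pairs. Pass `emotions`
    or `groups` explicitly to also catch values that never appear at all.
    """
    counts = Counter(samples)
    emotions = emotions or sorted({e for e, _ in samples})
    groups = groups or sorted({g for _, g in samples})
    return [(e, g, counts[(e, g)])
            for e, g in product(emotions, groups)
            if counts[(e, g)] < min_count]


# Hypothetical labeled samples from two groups, "A" and "B".
samples = ([("happiness", "A")] * 5 + [("happiness", "B")] * 5
           + [("sadness", "A")] * 4 + [("sadness", "B")] * 1)
```

Here `coverage_gaps(samples, 3)` returns `[("sadness", "B", 1)]`, and passing `emotions=["happiness", "sadness", "fear"]` also reveals that no "fear" examples exist for either group.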

This is where the education industry might have some luck. With lessons all over the world being held over webcams, there is an abundance of data. In theory, the sheer number of online classes held every day now makes it possible to create datasets focused solely on students and the emotions they show during class.

Students sitting in front of cameras are ideal candidates for data capture, which would otherwise be very expensive and require students to spend extra time in a lab. Even one of the biggest problems of Emotion AI, cultural differences in how people convey emotions, could be addressed, since students in much of the world are now learning online.

What Are the Ethical Concerns of Emotional Artificial Intelligence?

I cannot cover the topic of Emotion AI without mentioning the very valid ethical concerns associated with it. At the beginning of this article, I mentioned the growing emotional distance between people and the degradation of social interaction in today's society. Even if you accept this position, you must still ask yourself: assuming that you can, for example, augment the capabilities of teachers, should you actually do it?

Many believe there is no cause for concern as long as appropriate regulations are in place. Rosalind Picard, co-founder of Affectiva and Empatica, believes that existing regulations can be used to ensure the safety of everyone involved. Whenever these technologies are used, it is essential that their results be contestable and that they never be the sole deciding factor in a decision.

Others disagree and argue that stricter policies are needed. There have been many debates on the topic and even some controversies: in December 2019, the AI Now research institute called for a ban on the use of emotion recognition technologies in decisions that can significantly affect people's lives. Facial recognition is a particularly hot topic, with many activists campaigning against it. Some states have gone even further: Illinois, for example, passed a law that regulates the use of AI to analyze job interviews.

Nonetheless, there is no denying that emotion recognition technology is slowly becoming mainstream. The market for Emotion AI has been growing steadily and is expected to reach USD 90 billion by 2024.

Some Final Thoughts On Emotional AI

Research by Gartner suggests that 24% of companies have increased their investments in AI since the onset of COVID-19, so it isn't surprising that schools have also started showing interest in artificial intelligence. Standard models of education are rapidly becoming outdated, with online platforms and tools more popular than ever.

As with most innovations, those who adapt faster will prosper, while those who do not will fall behind. It may be only a matter of time before students in AI-enhanced classrooms start showing better results than those who study in traditional classrooms. If so, given the importance of education in our society, AI-enhanced classrooms may well become the norm in the near future.