In the previous article, we talked about pixel-level augmentations. While pixel-level augmentations can be very useful, they are often not enough to build a high-quality image augmentation pipeline. This is why you should combine them with spatial-level augmentations. These augmentations are far more complex, but the code you need to implement them is almost identical to the code we used in the article on pixel-level augmentations.

- Read previous article in this series: Intro to Image Augmentation: What Are Pixel-based Transformations? >>

For now, we'll explain what spatial-level transformations are and which of them are most commonly used. We will cover building image augmentation pipelines in the next article in this series.

What Are Spatial-Level Transformations?

In comparison to pixel-level transformations, spatial-level transformations are much more complex. They often alter the positions of objects in images, so they can't be applied in cases where a particular transformation will change a key aspect of an image. This is especially important when you work on more complex problems such as object detection and segmentation, because in these cases not only do you need to augment an image but you also need to be able to augment its accompanying bounding box, or segmentation mask.
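To make the point about bounding boxes and masks concrete, here is a minimal NumPy sketch of flipping an image horizontally together with its mask and bounding box. It is an illustration only, not how Albumentations implements it. The helper name `hflip_with_bbox` and the `(x_min, y_min, x_max, y_max)` pixel format with exclusive maxima are assumptions made for this example.

```python
import numpy as np


def hflip_with_bbox(image, mask, bbox):
    """Horizontally flip an image, its mask, and one bounding box.

    bbox is (x_min, y_min, x_max, y_max) in pixels, with x_max and y_max
    exclusive (an assumption of this sketch).
    """
    width = image.shape[1]
    flipped_image = image[:, ::-1]   # reverse the column order
    flipped_mask = mask[:, ::-1]     # the mask must follow the image
    x_min, y_min, x_max, y_max = bbox
    # Only the x coordinates change; they are mirrored around the image width
    flipped_bbox = (width - x_max, y_min, width - x_min, y_max)
    return flipped_image, flipped_mask, flipped_bbox


# Toy 4x6 image with a bright 2x2 "object" in the top-left corner
image = np.zeros((4, 6), dtype=np.uint8)
image[0:2, 0:2] = 255
mask = (image > 0).astype(np.uint8)
bbox = (0, 0, 2, 2)

flipped_image, flipped_mask, flipped_bbox = hflip_with_bbox(image, mask, bbox)
print(flipped_bbox)  # (4, 0, 6, 2): the box now sits in the top-right corner
```

If the bounding box were left untouched, it would point at an empty corner of the flipped image, which is exactly the kind of mismatch you need to avoid in detection and segmentation tasks.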

Spatial-level transformations are almost always used together with pixel-level transformations. Whenever you augment your dataset by applying transformations to images, you will usually include at least one spatial transformation. The library we are going to use for this is the same library we used for applying pixel-level transformations: the Albumentations library.

There are thirty-seven different spatial transformations you can apply using Albumentations. As with pixel-level transformations, we won't go in-depth on each one. The most popular spatial-level transformations can roughly be separated into the following four groups:

- Flipping

- Cropping and resizing

- Rotation

- Complex transformations

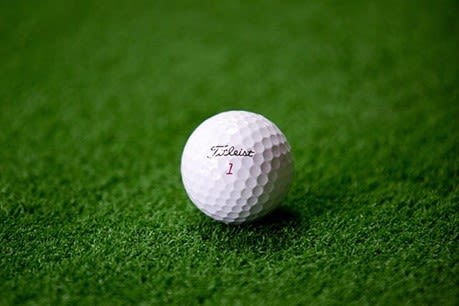

To demonstrate the different transformations, we will use an image of a golf ball.

First, let's import the libraries that are necessary to demonstrate spatial-level transformations:

# Import the libraries we need

import albumentations

from PIL import Image

import numpy as np

Once the libraries are imported, it is time to load the image of the golf ball. Albumentations works on NumPy arrays rather than PIL images, so the image will be converted into an array right before a transformation is applied:

# Load in the image and display it

golf_ball_image = Image.open('golf_ball_image.png')

golf_ball_image

To avoid repeating the same code again and again, we'll create a function that will apply a transformation to an image:

# Create a function for transforming images

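def augment_img(aug, image):

    # Albumentations expects a NumPy array, not a PIL image

    image_array = np.array(image)

    # A transformation returns a dictionary; the result is stored under 'image'

    augmented_img = aug(image=image_array)['image']

    return Image.fromarray(augmented_img)

Now that everything has been prepared, we'll jump straight into explaining the different transformations.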

How to Flip an Image

Flipping is a procedure where an image gets flipped horizontally, vertically, or both horizontally and vertically. It helps your model learn how to recognize image elements when their orientation in an image changes. There are three main transformations in Albumentations designed for flipping images:

- Flip

- HorizontalFlip

- VerticalFlip

The Flip transformation allows you to choose which type of flipping will get performed, while the HorizontalFlip and VerticalFlip transformations do exactly what their name says. All three are used very often, even though you will mostly see people use HorizontalFlip and VerticalFlip.

I'll demonstrate the transformations. You can get a horizontally flipped version of the original image by running the following code:

# Horizontal flipping

hor_flip = albumentations.HorizontalFlip(p=1)

augment_img(hor_flip, golf_ball_image)

Once you run this code, you will get the following image:

As you can see, the result is similar to what you would get by mirroring the image.

Now let's take a look at the vertically flipped version of the image, which you can get by running the following code:

# Vertical flipping

ver_flip = albumentations.VerticalFlip(p=1)

augment_img(ver_flip, golf_ball_image)

The vertically flipped version of the golf ball image looks like this:

These two transformations help your model learn how to deal with similar objects that appear in different contexts in images. If you don't want to commit to a single type of flip, you can use the Flip transformation, which randomly flips the image horizontally, vertically, or both horizontally and vertically at the same time:

# Random flip: horizontal, vertical, or both

random_flip = albumentations.Flip(p=1)

augment_img(random_flip, golf_ball_image)

Because the type of flip is picked randomly, you may need to run the code above a few times before you get an image that is flipped both horizontally and vertically:

Even though it is possible to do both flips at the same time, typically only one is used. It mostly depends on the images you are working with because sometimes a certain flip doesn't make sense.

For example, flipping an image of a castle vertically gives you an image you probably won't run into in real-life scenarios, so there is no benefit to doing so.
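If you want to see what the three kinds of flips do to the underlying pixel grid, the following NumPy sketch applies them to a tiny array. It only illustrates the idea and is not the Albumentations implementation.

```python
import numpy as np

# A tiny 2x3 "image" whose values make the pixel positions easy to follow
image = np.array([[1, 2, 3],
                  [4, 5, 6]])

horizontal = np.fliplr(image)        # mirror left <-> right
vertical = np.flipud(image)          # mirror top <-> bottom
both = np.flipud(np.fliplr(image))   # both flips: same as a 180-degree rotation

print(horizontal)  # [[3 2 1], [6 5 4]]
print(vertical)    # [[4 5 6], [1 2 3]]
print(both)        # [[6 5 4], [3 2 1]]
```

Note that flipping in both directions is equivalent to rotating the image by 180 degrees, which is one more reason to think about whether such an image could realistically appear in your data.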

How to Crop and Resize an Image

Cropping is one of the most commonly used image-processing operations. It is the process of removing certain unwanted parts of an image. It is a very popular technique used in image augmentation. There is a multitude of cropping transformations offered by Albumentations:

- CenterCrop

- Crop

- CropAndPad

- CropNonEmptyMaskIfExists

- RandomCrop

- RandomCropNearBBox

- RandomResizedCrop

- RandomSizedBBoxSafeCrop

- RandomSizedCrop

Of these, the simplest transformation to apply is the Crop transformation. You just specify the coordinates of the section you want to keep by running the following code:

# Simple crop operation

basic_crop = albumentations.Crop(x_min=250, y_min=100, x_max=420, y_max=320, p=1)

augment_img(basic_crop, golf_ball_image)

The result you get by running the code above will be an image cropped based on the coordinates you defined. In this case, if we use the previously defined coordinates for cropping, we will end up with the following result:

Do note that cropping will change the dimensions of the image, so make sure to resize it later on if you plan on feeding it to a neural network.
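A quick way to see how the dimensions change is to reproduce the crop with plain NumPy slicing. The sketch below uses the same coordinates as the Crop example. The 480 x 640 image size and the helper name `crop` are assumptions made for illustration.

```python
import numpy as np


def crop(image, x_min, y_min, x_max, y_max):
    """Return the region [y_min:y_max, x_min:x_max] of an H x W x C array."""
    return image[y_min:y_max, x_min:x_max]


# Stand-in for a 480 x 640 RGB image (the size is an assumption)
image = np.zeros((480, 640, 3), dtype=np.uint8)
cropped = crop(image, x_min=250, y_min=100, x_max=420, y_max=320)

print(image.shape)    # (480, 640, 3)
print(cropped.shape)  # (220, 170, 3)
```

The cropped array is 220 pixels high and 170 pixels wide, so a network that expects a fixed input size would reject it unless you resize it first.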

To solve that problem you can use the RandomSizedCrop or RandomResizedCrop transformations. These are what you will probably use most often anyway, since all the other options are pretty task-dependent, so it is hard to recommend them for every situation. RandomSizedCrop and RandomResizedCrop do essentially the same thing: both crop a random part of the image and resize the result to a fixed size. RandomResizedCrop is the Torchvision-style variant of the operation and is configured through different parameters.

To crop a part of the image, and automatically resize it to the size of the original image you need to run the following code:

# Crop and resize

img_width, img_height = golf_ball_image.size

crop_and_resize = albumentations.RandomSizedCrop(

    min_max_height=[100, 300],

    height=img_height,

    width=img_width)

augment_img(crop_and_resize, golf_ball_image)

The result we get from running this code is:

Take into consideration that the procedure randomly crops a part of the image, so even if you run the code above, you might not get the same results. But if you try running the code multiple times, you will probably get a result similar to this image after a few runs.

In practice, setting up this crop properly is relatively hard. Because of the randomness, running it might even lead to crops where the particular object you are trying to display ends up missing from the image.
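To get a feeling for how often a random crop can miss the object, you can simulate the crop windows without touching any image data. The sketch below is a simplified model, not the Albumentations algorithm: it assumes a square crop window, a 480 x 640 image, and a hypothetical bounding box for the golf ball. The helper names are made up for this example.

```python
import random


def random_crop_window(img_height, img_width, min_height, max_height, rng):
    """Pick a random square crop window (x_min, y_min, x_max, y_max)."""
    size = rng.randint(min_height, max_height)
    size = min(size, img_height, img_width)   # never exceed the image
    x_min = rng.randint(0, img_width - size)
    y_min = rng.randint(0, img_height - size)
    return x_min, y_min, x_min + size, y_min + size


def visible_fraction(bbox, window):
    """Fraction of the bounding box area that falls inside the crop window."""
    bx0, by0, bx1, by1 = bbox
    wx0, wy0, wx1, wy1 = window
    overlap_w = max(0, min(bx1, wx1) - max(bx0, wx0))
    overlap_h = max(0, min(by1, wy1) - max(by0, wy0))
    return (overlap_w * overlap_h) / ((bx1 - bx0) * (by1 - by0))


rng = random.Random(0)
object_bbox = (250, 100, 420, 320)   # hypothetical location of the golf ball
fractions = [
    visible_fraction(object_bbox, random_crop_window(480, 640, 100, 300, rng))
    for _ in range(1000)
]
missed = sum(f == 0 for f in fractions)
print(f"{missed} of 1000 simulated crops contain no part of the object")
```

Running a simulation like this on your own image sizes and object locations is a cheap way to check whether your crop limits are too aggressive before you start training.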

If you just want to resize an image using Albumentations, without actually cropping it, you can use:

- Resize

- RandomScale

Of these two transformations, you will use Resize if you want to precisely define the output dimensions. RandomScale rescales your input image by a randomly picked factor, so the output dimensions change from run to run.
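Conceptually, resizing to fixed dimensions means mapping every output pixel back to a pixel in the input. The sketch below shows this with nearest-neighbor sampling in NumPy. This is a deliberately simplified stand-in: Albumentations relies on proper interpolation, and the helper name `resize_nearest` and the 480 x 640 input size are assumptions for this example.

```python
import numpy as np


def resize_nearest(image, new_height, new_width):
    """Resize an H x W (x C) array with nearest-neighbor sampling."""
    # For every output row/column, find the source row/column it maps to
    rows = np.arange(new_height) * image.shape[0] // new_height
    cols = np.arange(new_width) * image.shape[1] // new_width
    return image[rows][:, cols]


# Stand-in for a 480 x 640 RGB image (the size is an assumption)
image = np.zeros((480, 640, 3), dtype=np.uint8)
resized = resize_nearest(image, new_height=1024, new_width=512)

print(resized.shape)  # (1024, 512, 3)
```

Notice that the target dimensions do not have to respect the original aspect ratio. A landscape image resized to 1024 x 512 will look stretched vertically, which is itself a form of distortion your model will see.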

We'll demonstrate how to resize an image:

# Resize the image

img_width, img_height = [512, 1024]

resize = albumentations.Resize(img_height, img_width, p=1)

augment_img(resize, golf_ball_image)

Running the code above will result in the following image:

How to Rotate an Image

Image rotation is very commonly used for image augmentation. The procedure is very simple: you take an image and rotate it to teach your model how to recognize objects in the image even if their orientation changes. For rotation, Albumentations offers the following transformations:

- RandomRotate90

- Rotate

- SafeRotate

- ShiftScaleRotate

Of the above, the one that is easiest to use is ShiftScaleRotate. This is also the transformation used most commonly. The Rotate transformation rotates the image by an angle picked randomly from a range you define, but rotation is the only thing it does. RandomRotate90 rotates the input by a randomly chosen multiple of 90 degrees, so it does include some useful randomness, but it can't produce any of the angles in between.
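The limitation of quarter-turn rotations is easy to see in NumPy, where `np.rot90` does the same kind of operation. The sketch below is only an illustration of the idea. The seed and the toy array are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(0)
image = np.arange(6).reshape(2, 3)   # toy 2x3 "image"

# Pick the number of quarter turns at random: 0, 1, 2, or 3
k = int(rng.integers(0, 4))
rotated = np.rot90(image, k)

print(k, rotated.shape)
```

Whatever value of `k` is drawn, there are only four possible outputs. For a non-square image, an odd number of quarter turns also swaps the height and the width.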

The reason why using ShiftScaleRotate is preferred is that, by using it, you perform 3 affine operations randomly: translation, rotation, and scaling. This way you get a lot of different versions of your image that you can feed into the model.
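Translation, rotation, and scaling can all be written as matrices, and combining them is just matrix multiplication. The NumPy sketch below builds such a combined matrix and applies it to a point. It is a simplified illustration rather than the Albumentations implementation: the function name, the 640 x 480 image size, and the sampling ranges are assumptions chosen for this example.

```python
import math
import numpy as np


def shift_scale_rotate_matrix(width, height, shift_x, shift_y, scale, angle_deg):
    """Build a 3x3 matrix that rotates and scales about the image center,
    then shifts the result by (shift_x, shift_y) pixels."""
    cx, cy = width / 2, height / 2
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)

    to_origin = np.array([[1, 0, -cx], [0, 1, -cy], [0, 0, 1]], dtype=float)
    rotate_scale = np.array(
        [[scale * cos_t, -scale * sin_t, 0],
         [scale * sin_t, scale * cos_t, 0],
         [0, 0, 1]]
    )
    back_and_shift = np.array(
        [[1, 0, cx + shift_x], [0, 1, cy + shift_y], [0, 0, 1]], dtype=float
    )
    # Applied right to left: center to origin, rotate and scale, move back and shift
    return back_and_shift @ rotate_scale @ to_origin


# Sample one random set of parameters (the ranges are illustrative)
rng = np.random.default_rng(0)
matrix = shift_scale_rotate_matrix(
    width=640, height=480,
    shift_x=rng.uniform(-0.0625, 0.0625) * 640,
    shift_y=rng.uniform(-0.0625, 0.0625) * 480,
    scale=1 + rng.uniform(-0.1, 0.1),
    angle_deg=rng.uniform(-45, 45),
)

# In homogeneous coordinates a point is written as (x, y, 1)
center = matrix @ np.array([320, 240, 1])
print(center[:2])  # the image center is moved only by the shift
```

Every new draw of the four random parameters produces a different matrix, which is why a single transformation can generate so many distinct versions of the same image.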

We'll demonstrate how it works by running the following code:

# Perform affine operations

shift_scale_rot = albumentations.ShiftScaleRotate(p=1)

augment_img(shift_scale_rot, golf_ball_image)

By running the code above you get a randomly modified image. In our case, it looks like this:

How to Do Complex Transformations on Images

There is a separate class of highly complex transformations that find limited use. They heavily change the original image, so if the model depends on noticing subtle details, these complex transformations might cause big problems. I won't go over them in detail, but let's demonstrate how a complex transformation modifies the image using the ElasticTransform operation available in Albumentations.

ElasticTransform is a method that combines random affine transformations with the application of local distortion. The result is an image that is very different from the original image.

We'll demonstrate by running the code below:

# Complex image transformation: ElasticTransform

el_transform = albumentations.ElasticTransform(alpha_affine=105, p=1)

augment_img(el_transform, golf_ball_image)

In the code above, I cranked up the parameters for demonstration. The image of the golf ball is very simple, so parameters that would typically cause problems don't cause them in our case, but with cranked-up parameters, this is what we get:

Once again, there is some degree of randomness involved, but as you can see in the image, the image of a single golf ball has suddenly been transformed into an image that shows three deformed golf balls. So if you are not familiar with this transformation, and similar transformations, be sure to either read their documentation in detail or just avoid using them in your image augmentation pipelines.

In this article, we built upon the previous article in this series, which talked about the basics of image augmentation and pixel-level transformations. This article covered the essentials of spatial-level transformations. We first explained what spatial-level transformations are. Then, we mentioned the most common ones and demonstrated how to use them to augment images. In the next article in this series, we will look at how to combine transformations into image augmentation pipelines.